Xin (Eric) Wang

Assistant Professor, UCSB Computer Science

Director, UCSB Center for Responsible Machine Learning

Head of Research, Simular

Email: ericxwang [at] ucsb [dot] edu

Bio

Xin (Eric) Wang is an Assistant Professor in the Computer Science Department at UC Santa Barbara and the Head of Research at Simular. He also directs the UCSB Center for Responsible Machine Learning (CRML). His research interests include Natural Language Processing, Computer Vision, and Machine Learning, with an emphasis on Multimodal and Agentic AI. Previously, he was a faculty member at UC Santa Cruz and worked at Google Research, Meta FAIR, Microsoft Research, and Adobe Research.

Eric has served as (Senior) Area Chair for conferences such as ACL, NAACL, EMNLP, ICLR, NeurIPS, and ICML, and organized workshops and tutorials at those venues. He has received several awards and recognitions for his work, including Best Paper Awards from CVPR and ICLRW, Google Research Faculty Award, Amazon Alexa Prize Awards, JPMorganChase Faculty Research Award, Cisco Faculty Research Award, eBay Faculty Research Awards, AAII Interdisciplinary Research Award, and various gift awards/grants from Adobe, Apple, Amazon, Snap, Microsoft, OpenAI, Cybever, Orby, etc.

News

| [NEW!] | Three papers accepted to ICLR 2026! |

| [NEW!] | Check out the inaugural CRML Agentic AI Summit at UCSB! |

| [NEW!] | Serving as Senior Area Chair for ACL 2026 and Area Chair for ICLR 2026 and ICML 2026. |

| [NEW!] | Agent S3 is released! The first computer use agent that surpasses human performance. |

| [NEW!] | Three papers accepted to NeurIPS 2025! |

| [NEW!] | Four papers accepted to EMNLP 2025! |

| [NEW!] | Invited Talks at Amazon and AI Agent Frontier Seminar. |

| [NEW!] | Our Agent S2 paper accepted to COLM 2025! |

| [NEW!] | Our VLM4D paper accepted to ICCV 2025! |

| [NEW!] | Invited Talks at IVADO Bootcamp and Workshop on Autonomous LLM Agents. |

| [NEW!] | Invited Talks at Apple, Cisco, Cresta, and Georgian. |

| [NEW!] | Keynote at SEMLA 2025. |

| [NEW!] | Huge congratulations to Dr. Xuehai He and Dr. Jing Gu on successfully defending their dissertations and graduating! Wishing you both the very best in your next chapter! |

| [NEW!] | Two papers accepted to ACL 2025! |

| [NEW!] | Our Agent S paper won the Best Paper Award at ICLR 2025 Agentic AI for Science! |

| [NEW!] | Serving as Senior Area Chair for EMNLP 2025 and Area Chair for NeurIPS 2025. |

| [NEW!] | Agent S2 is released! The best computer use agent with new SOTA on OSWorld, WindowsAgentArena, and AndroidWorld. |

| [NEW!] | Keynote Talk at the Large Model Safety Workshop. |

| [NEW!] | Invited Talks at Adobe Research, ServiceNow Research, Apple, and eBay. |

| [NEW!] | Four papers accepted to ICLR 2025! |

| [NEW!] | One paper accepted to NAACL 2025! |

| [NEW!] | Received multiple faculty research awards from eBay and Cisco. Thanks! |

| [NEW!] | Three papers accepted to EMNLP 2024! |

| [NEW!] | Two papers accepted to TMLR 2024! |

| [NEW!] | Two papers accepted to ECCV 2024! |

| [NEW!] | Two papers accepted to ACL 2024! |

| [NEW!] | Two papers accepted to NAACL 2024! |

| [NEW!] | Invited talks at Stanford University, Ohio State University, and University of Waterloo. |

| [NEW!] | Serving as Area Chair for ICLR 2025, NeurIPS 2024, and COLM 2024. |

| [NEW!] | Received a research grant from Microsoft. Thanks Microsoft! |

| [NEW!] | Received a gift award from Adobe. Thanks Adobe! |

| [NEW!] | Received multiple gift awards from eBay and Snap. Thanks eBay and Snap! |

| [NEW!] | Two workshops are accepted to ACL 2024! Will be co-organizing the 3rd Workshop on Advances in Language and Vision Research (ALVR 2024) and the Fourth International Combined Workshop on Spatial Language Understanding and Grounded Communication for Robotics (SpLU-RoboNLP 2024) in Bangkok, Thailand. |

| [NEW!] | Invited talk at Yale University (10/2023). |

| [NEW!] | Three papers accepted to NeurIPS 2023! Congratulations to all authors! |

| [NEW!] | Three papers accepted to EMNLP 2023! Congratulations to all authors! |

| [NEW!] | Our Athena team was awarded Second Place (Science Innovation Winner, $50,000) in the Alexa Prize SocialBot Grand Challenge 5! |

| [NEW!] | Serving as Area Chair for ICLR 2024. |

| [NEW!] | Our SlugJARVIS team won Third Place ($50,000) in the inaugural Alexa Prize SimBot Challenge! |

| [NEW!] | Two papers on (1) Aerial Vision-and-Dialog Navigation and (2) Text-to-Image Association Test are accepted to ACL 2023! |

| [NEW!] | Our ESC paper is accepted to ICML 2023! |

| [NEW!] | Our SlugJARVIS team advances to the finals of the inaugural Alexa Prize SimBot Challenge! Check out Amazon News for more information about this and UCSC News for our three teams of all three Alexa Prize Challenges! |

| [NEW!] | Co-organizing the 5th Workshop on Closing the Loop Between Vision and Language (CLVL) at ICCV 2023. |

| [NEW!] | Serving as Area Chair for NeurIPS 2023. |

| [NEW!] | Invited talk at the CVPR 2023 VizWiz Grand Challenge Workshop (06/2023). |

| [NEW!] | Invited talks at Google Research and UCI (03/2023). |

| [NEW!] | Invited talks at KAUST and USC (02/2023). |

| [NEW!] | Two papers on (1) Training-Free Structured Diffusion Guidance and (2) Neuro-Symbolic Procedural Planning with Commonsense Prompting (Spotlight) are accepted to ICLR 2023! |

| [NEW!] | Three papers on (1) Multimodal Graph Transformer, (2) Imagination-Based Automatic Evaluation, and (3) Imagination-Guided Open-Ended Text Generation are accepted to EACL 2023! |

| [NEW!] | Co-organizing the Workshop on Spatial Language Understanding and Grounded Communication for Robotics (SpLU-RoboNLP) at EMNLP 2023. |

| [NEW!] | Serving as Area Chair for ACL 2023, ICLR 2023, and EMNLP 2022. |

| [NEW!] | Our Sage team received an Amazon Alexa Prize Award to work on Alexa Prize TaskBot Challenge 2. Thanks Amazon! |

| [NEW!] | Our paper "Parameter-Efficient Model Adaptation for Vision Transformers" is accepted to AAAI 2023! |

| [NEW!] | Our Athena team received an Amazon Alexa Prize Award to work on Alexa Prize SocialBot Grand Challenge 5. Thanks Amazon! |

| [NEW!] | Our paper "CPL: Counterfactual Prompt Learning for Vision and Language Models" is accepted to EMNLP 2022! |

| [NEW!] | Our VLMbench paper is accepted to NeurIPS 2022 (Datasets and Benchmarks)! Check out the new compositional benchmark for vision-and-language robotic manipulation! |

| [NEW!] | Invited talk at Adobe Research (08/2022). |

| [NEW!] | Our papers on (1) Privacy-preserving Federated Vision-and-Language Navigation and (2) Language-guided Artistic Style Transfer are accepted to ECCV 2022! |

| [NEW!] | Our SlugJARVIS team won the Alexa Prize SimBot Public Benchmark Challenge! |

| [NEW!] | Our paper on Understanding Instance-Level Impact of Fairness Constraints accepted to ICML 2022! |

| [NEW!] | Two papers accepted to NAACL 2022 as Oral presentations! Topics include (1) Imagination-Augmented Natural Language Understanding and (2) Diagnosing Vision-and-Language Navigation. |

| [NEW!] | Invited talk at Fudan University (03/2022). |

| [NEW!] | Two papers accepted to CVPR 2022! Topics include (1) Compositional Temporal Grounding and (2) Language-based Video Editing. |

| [NEW!] | Three papers accepted to ACL 2022! Topics include (1) Vision-and-Language Navigation Survey, (2) Multilingual Fairness, and (3) Interpretable Research Replication Prediction. |

| [NEW!] | We have received a Google Faculty Research Award. Thanks Google! |

| [NEW!] | Invited speaker at the CVPR 2022 Workshop on Open-Domain Retrieval Under a Multi-Modal Setting. |

| [NEW!] | Invited talk at USC ISI (02/2022). |

| [NEW!] | Our SlugJARVIS team received an Amazon Alexa Prize Award to work on Alexa Prize SimBot Challenge. Thanks Amazon! |

| [NEW!] | Serving as Area Chair for ACL 2022 and NAACL 2022. |

| [NEW!] | We have received the AAII Interdisciplinary Research Award. |

| [NEW!] | Invited talk at Microsoft Research (11/2021). |

| [NEW!] | Our paper on Mitigating Gender Bias in Image Search is accepted to EMNLP 2021 as an Oral paper. Congratulations Jialu! |

| [NEW!] | Invited talk at Stanford Vision Lab (10/2021). |

| [NEW!] | Received Google Cloud Research Credits. |

| [NEW!] | Our VALUE paper is accepted to NeurIPS 2021 (Datasets and Benchmarks). Congratulations to all the authors! |

| [NEW!] | Serving as Senior Program Committee (SPC) member for AAAI 2022 and IJCAI-ECAI 2022. |

| [NEW!] | Co-organizing the 4th Workshop on Closing the Loop Between Vision and Language (CLVL) at ICCV 2021! |

| [NEW!] | I am giving a Tutorial on "From VQA to VLN: Recent Advances in Vision-and-Language Research" at CVPR 2021! |

| [NEW!] | Co-organizing the Second Workshop on Advances in Language and Vision Research (ALVR) at NAACL 2021! |

| [NEW!] | I am giving a keynote talk at the Third Workshop on Multimodal Artificial Intelligence at NAACL 2021 on June 6th! |

| [NEW!] | Our paper on Visual Question Rewriting was accepted to SIGIR 2021! |

| [NEW!] | I am serving as Area Chair for CoNLL 2021 and NLPCC 2021. |

| [NEW!] | Invited talk at Arizona State University. |

| [NEW!] | Two papers on multimodal style transfer learning for VLN and visual comparison were accepted to EACL 2021! |

| [NEW!] | I am serving as Area Chair for NAACL 2021. |

| [NEW!] | I am serving as Senior Program Committee (SPC) member for IJCAI 2021. |

| [NEW!] | Three papers were accepted to EMNLP 2020 (two conference papers and one Findings paper)! |

| [NEW!] | Two papers were accepted to ECCV 2020 (the adversarial path sampling paper was selected as Spotlight)! |

| [NEW!] | I successfully defended my Ph.D. Dissertation Closing the Loop Between Language and Vision for Embodied Agents. Thanks to the committee and everyone who has helped me along the Ph.D. journey! |

| [NEW!] | I am serving as Area Chair and Session Chair for EMNLP 2020. |

| [NEW!] | Co-organizing the workshop on Advances in Language and Vision Research (ALVR) at ACL 2020! |

| [NEW!] | Two papers were accepted to CVPR 2020 (the REVERIE paper was selected as Oral)! |

| [03/2020] | Invited panelist at the GPU Technology Conference (GTC) 2020. |

| [11/2019] | Organizer of the workshop on Language & Vision with applications to Video Understanding at CVPR 2020. |

| [11/2019] | Organizer of the tutorial on Self-Supervised Deep Learning for NLP at AACL-IJCNLP 2020. |

| [10/2019] | Invited speaker at the ICCV 2019 Workshop on Person In Context. |

| [06/2019] | Recipient of the CVPR 2019 Best Student Paper Award. |

| [06/2019] | Co-Organizer of the workshop on Closing the Loop Between Vision and Language at ICCV 2019. |

| [06/2019] | Invited talk at Facebook AI. |

| [01/2019] | Session Chair for AAAI 2019 (natural language processing). |

Selected Awards / Honors

Cisco Faculty Research Award, 2025

JPMorganChase Faculty Research Award, 2025

Best Paper Award, ICLR Agentic AI for Science Workshop, 2025

eBay Faculty Research Award, 2025

eRUPT Research Award, 2025

Cisco Faculty Research Award, 2024

Innovator of the Year Award, Finalist, UC Santa Cruz, 2024

eBay Faculty Research Award, 2024

Snap Faculty Research Award, 2024

Alexa Prize Award (SocialBot), Second Place, 2023

Alexa Prize Award (SimBot), Third Place, 2023

Alexa Prize Award (TaskBot), Finalist (Top 5), 2023

eBay Faculty Research Award, 2023

Google Faculty Research Award, 2022

AAII Interdisciplinary Research Award, 2022

Outstanding Publication Award, UC Santa Barbara, 2020

Best Student Paper Award, CVPR, 2019

Adobe Research Fellowship, Finalist, 2019

Outstanding Graduates, Zhejiang University, 2015

Top 100 Excellent Undergraduate Students of the Year, China Computer Federation, 2014

Selected Publications [Full Publications]

Preprint

|

Group-Evolving Agents: Open-Ended Self-Improvement via Experience Sharing |

|

SafeGround: Know When to Trust GUI Grounding Models via Uncertainty Calibration |

|

Reasoning Within the Mind: Dynamic Multimodal Interleaving in Latent Space |

|

SafePro: Evaluating the Safety of Professional-Level AI Agents |

|

Scaling Agents for Computer Use |

|

Constructing a 3D Town from a Single Image |

2026

|

PhyWorldBench: A Comprehensive Evaluation of Physical Realism in Text-to-Video Models |

|

Presenting a Paper is an Art: Self-Improvement Aesthetic Agents for Academic Presentations |

|

SAFER: Risk-Constrained Sample-then-Filter in Large Language Models |

|

MiniGPT-5: Interleaved Vision-and-Language Generation via Generative Vokens |

2025

|

Soft Thinking: Unlocking the Reasoning Potential of LLMs in Continuous Concept Space |

|

GRIT: Teaching MLLMs to Think with Images |

|

More Thinking, Less Seeing? Assessing Amplified Hallucination in Multimodal Reasoning Models |

|

SafeKey: Amplifying Aha-Moment Insights for Safety Reasoning |

|

Hidden in Plain Sight: Probing Implicit Reasoning in Multimodal Language Models |

|

GUI-Bee: Align GUI Action Grounding to Novel Environments via Autonomous Exploration |

|

Dynamic Evaluation for Oversensitivity in LLMs |

|

Agent S2: A Compositional Generalist-Specialist Framework for Computer Use Agents |

|

VLM4D: Towards Spatiotemporal Awareness in Vision Language Models |

|

Multimodal Inconsistency Reasoning (MMIR): A New Benchmark for Multimodal Reasoning Models |

|

Worse than Random? An Embarrassingly Simple Probing Evaluation of Large Multimodal Models in Medical VQA |

|

The Hidden Risks of Large Reasoning Models: A Safety Assessment of R1 |

|

Agent S: An Open Agentic Framework that Uses Computers Like a Human |

|

Multimodal Situational Safety |

|

MMWorld: Towards Multi-discipline Multi-faceted World Model Evaluation in Videos |

|

EditRoom: LLM-parameterized Graph Diffusion for Composable 3D Room Layout Editing |

|

LLM-Coordination: Evaluating and Analyzing Multi-Agent Coordination Abilities in Large Language Models |

2024

|

Read Anywhere Pointed: Layout-aware GUI Screen Reading with Tree-of-Lens Grounding |

|

Active Listening: Personalized Question Generation in Open-Domain Social Conversation with User Model Based Prompting |

|

Multimodal Procedural Planning via Dual Text-Image Prompting |

|

FlexEControl: Flexible and Efficient Multimodal Control for Text-to-Image Generation |

|

Discffusion: Discriminative Diffusion Models as Few-shot Vision and Language Learners |

|

SwapAnything: Enabling Arbitrary Object Swapping in Personalized Visual Editing |

|

NavGPT-2: Unleashing Navigational Reasoning Capability for Large Vision-Language Models |

|

Muffin or Chihuahua? Challenging Large Vision-Language Models with Multipanel VQA |

|

ViCor: Bridging Visual Understanding and Commonsense Reasoning with Large Language Models |

|

Navigation as Attackers Wish? Towards Building Robust Embodied Agents under Federated Learning |

|

ComCLIP: Training-Free Compositional Image and Text Matching |

2023

|

Photoswap: Personalized Subject Swapping in Images |

|

LayoutGPT: Compositional Visual Planning and Generation with Large Language Models |

|

LLMScore: Unveiling the Power of Large Language Models in Text-to-Image Synthesis Evaluation |

|

R2H: Building Multimodal Navigation Helpers that Respond to Help Requests |

|

Collaborative Generative AI: Integrating GPT-k for Efficient Editing in Text-to-Image Generation |

|

Parameter-Efficient Cross-lingual Transfer of Vision and Language Models via Translation-based Alignment |

|

ESC: Exploration with Soft Commonsense Constraints for Zero-shot Object Navigation |

|

Aerial Vision-and-Dialog Navigation |

|

T2IAT: Measuring Valence and Stereotypical Biases in Text-to-Image Generation |

|

Training-Free Structured Diffusion Guidance for Compositional Text-to-Image Synthesis |

|

Neuro-Symbolic Procedural Planning with Commonsense Prompting |

|

Multimodal Graph Transformer for Multimodal Question Answering |

|

Visualize Before You Write: Imagination-Guided Open-Ended Text Generation |

|

ImaginE: An Imagination-Based Automatic Evaluation Metric for Natural Language Generation |

|

Parameter-efficient Model Adaptation for Vision Transformers |

|

Athena 3.0: Personalized Multimodal Chatbot with Neuro-symbolic Dialogue Generators |

|

Sage: A Multimodal Knowledge Graph-based Conversational Agent for Complex Task Guidance |

|

SlugJARVIS: Multimodal Commonsense Knowledge-based Embodied AI for SimBot Challenge |

2022

|

CPL: Counterfactual Prompt Learning for Vision and Language Models |

|

VLMbench: A Compositional Benchmark for Vision-and-Language Manipulation |

|

FedVLN: Privacy-preserving Federated Vision-and-Language Navigation |

|

Language-Driven Artistic Style Transfer |

|

Understanding Instance-Level Impact of Fairness Constraints |

|

Imagination-Augmented Natural Language Understanding |

|

Diagnosing Vision-and-Language Navigation: What Really Matters |

|

Compositional Temporal Grounding with Structured Variational Cross-Graph Correspondence Learning |

|

M3L: Language-based Video Editing via Multi-Modal Multi-Level Transformer |

|

Vision-and-Language Navigation: A Survey of Tasks, Methods, and Future Directions |

|

Assessing Multilingual Fairness in Pretrained Multimodal Representations |

|

Interpretable Research Replication Prediction via Variational Contextual Consistency Sentence Masking |

2021

|

Are Gender-Neutral Queries Really Gender-Neutral? Mitigating Gender Bias in Image Search |

|

VALUE: A Multi-Task Benchmark for Video-and-Language Understanding Evaluation |

|

Visual Question Rewriting for Increasing Response Rate |

|

Multimodal Text Style Transfer for Outdoor Vision-and-Language Navigation |

|

L2C: Describing Visual Differences Needs Semantic Understanding of Individuals |

2020

|

Closing the Loop Between Language and Vision for Embodied Agents |

|

SSCR: Iterative Language-Based Image Editing via Self-Supervised Counterfactual Reasoning |

|

Towards Understanding Sample Variance in Visually Grounded Language Generation: Evaluations and Observations |

|

Learning to Stop: A Simple yet Effective Approach to Urban Vision-Language Navigation |

|

Environment-agnostic Multitask Learning for Natural Language Grounded Navigation |

|

Counterfactual Vision-and-Language Navigation via Adversarial Path Sampling |

|

Unsupervised Reinforcement Learning of Transferable Meta-Skills for Embodied Navigation |

|

REVERIE: Remote Embodied Visual Referring Expression in Real Indoor Environments |

|

Vision-Language Navigation Policy Learning and Adaptation |

|

Generative Adversarial Zero-Shot Relational Learning for Knowledge Graphs |

2019

|

TIGEr: Text-to-Image Grounding for Image Caption Evaluation |

|

VATEX: A Large-Scale, High-Quality Multilingual Dataset for Video-and-Language Research |

|

Reinforced Cross-Modal Matching and Self-Supervised Imitation Learning for Vision-Language Navigation |

|

MAN: Moment Alignment Network for Natural Language Moment Retrieval via Iterative Graph Adjustment |

|

Self-Supervised Dialogue Learning |

|

Self-Supervised Learning for Contextualized Extractive Summarization |

|

Towards Generating Long and Coherent Text with Multi-Level Latent Variable Models |

|

Extract and Edit: An Alternative to Back-Translation for Unsupervised Neural Machine Translation |

|

Learning to Compose Topic-Aware Mixture of Experts for Zero-Shot Video Captioning |

2018 and before

|

Look Before You Leap: Bridging Model-Free and Model-Based Reinforcement Learning for Planned-Ahead Vision-and-Language Navigation |

|

XL-NBT: A Cross-lingual Neural Belief Tracking Framework |

|

No Metrics Are Perfect: Adversarial Reward Learning for Visual Storytelling |

|

S3D: Single Shot multi-Span Detector via Fully 3D Convolutional Network |

|

Video Captioning via Hierarchical Reinforcement Learning |

|

Watch, Listen, and Describe: Globally and Locally Aligned Cross-Modal Attentions for Video Captioning |

|

Multimodal Transfer: A Hierarchical Deep Convolutional Neural Network for Fast Artistic Style Transfer |

|

Deep Reinforcement Learning for Visual Object Tracking in Videos |

Products

|

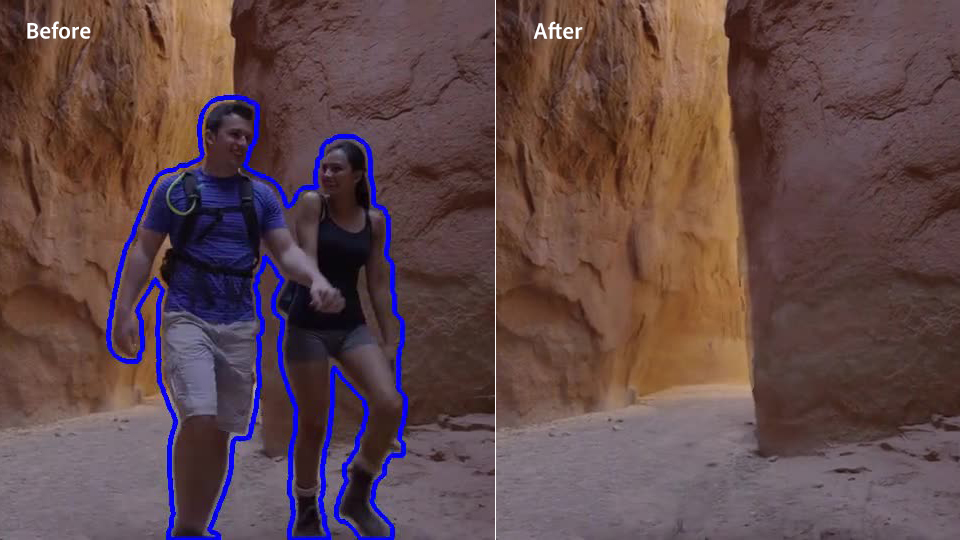

ProjectCloak: Remove Unwanted Objects in Video |

|

ArtisticEye: A Real-time Application for High-resolution Artistic Style Transfer |

Experience

|

Google Research, Mountain View, US: Research Intern, Summer 2019 |

|

|

Facebook AI Research (FAIR), Menlo Park, US: Graduate Researcher, Spring 2019 |

|

|

Microsoft Research AI, Redmond, US: Research Intern, Summer 2018 |

|

|

Adobe Research, San Francisco, US: Research Intern, Summer 2017 |

|

|

Adobe Research, San Francisco, US: Research Intern, Summer 2016 |

|

|

Exacloud Inc., Hangzhou, China: Software Engineer Intern, 12/2014 - 03/2015 |

|

|

HCI, Graphics and Computer Vision Group, HKU: Research Assistant, Summer 2014 |

|

Service

- 3rd Workshop on Advances in Language and Vision Research (ALVR), ACL 2024

- 4th Workshop on Spatial Language Understanding and Grounded Communication for Robotics (SpLU-RoboNLP), ACL 2024

- 3rd Workshop on Spatial Language Understanding and Grounded Communication for Robotics (SpLU-RoboNLP), EMNLP 2023

- 5th Workshop on Closing the Loop Between Vision and Language (CLVL), ICCV 2023

- Workshop on Human Interaction for Robot Navigation, ICCV 2021

- 4th Workshop on Closing the Loop Between Vision and Language (CLVL), ICCV 2021

- From VQA to VLN: Recent Advances in Vision-and-Language Research Tutorial, CVPR 2021

- 2nd Workshop on Advances in Language and Vision Research (ALVR), NAACL 2021

- Workshop on Advances in Language and Vision Research (ALVR), ACL 2020

- Workshop on Language & Vision with applications to Video Understanding, CVPR 2020

- Tutorial on Self-Supervised Deep Learning for NLP, AACL-IJCNLP 2020

- 3rd Workshop on Closing the Loop Between Vision and Language (CLVL), ICCV 2019

- ICLR 2024

- NeurIPS 2023

- ACL 2023

- ICLR 2023

- EMNLP 2022

- NAACL 2022

- ACL 2022

- AACL-IJCNLP 2022

- IJCAI-ECAI 2022

- AAAI 2022

- CoNLL 2021

- NLPCC 2021 (Multimodality)

- NAACL 2021 (Interpretability and Analysis of Models for NLP)

- IJCAI 2021

- EMNLP 2020 (Interpretability and Analysis of Models for NLP)

- NAACL 2021

- EMNLP 2020 (Language Grounding to Vision, Robotics and Beyond)

- AAAI 2019 (Natural Language Processing)